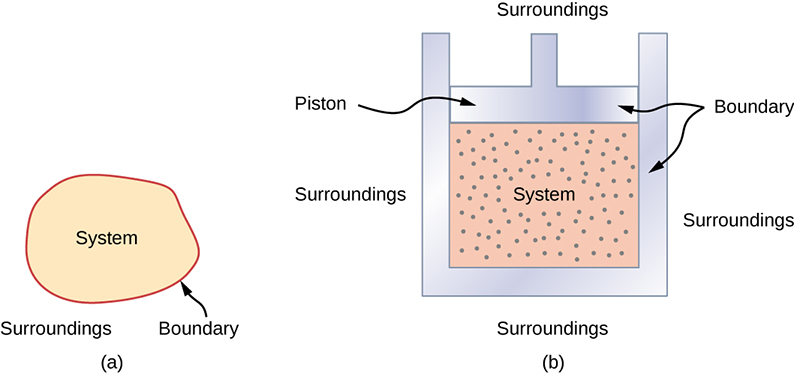

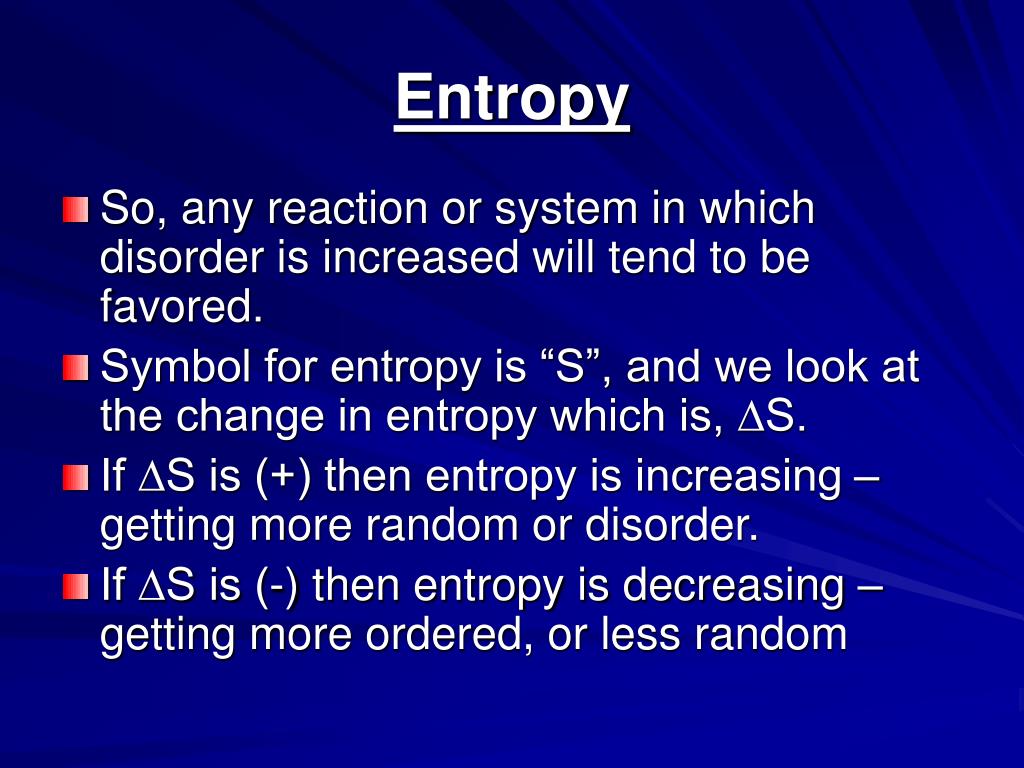

In chemical reactions, changes in entropy occur as a result of the rearrangement of atoms and molecules, which changes the initial order of the system. A system with a greater degree of disorder has more entropy. Entropy is denoted by S, and it is an extensive property: the value of entropy (or of an entropy change) depends on the amount of substance present in the thermodynamic system.

Entropy is a state function, i.e., its value does not depend on the path of the thermodynamic process; it is determined only by the initial and final states of the system. That is why we usually work with the entropy change, ΔS, between two states rather than with a value at a single point. Entropy change can be defined as the change in the state of disorder of a thermodynamic system that is associated with the conversion of heat or enthalpy into work.

As the first law of thermodynamics makes clear, energy can neither be created nor destroyed, only converted from one form to another. Energy gives the ability to get work done, yet it is practically impossible for all of the energy to be used in doing work. In simpler words, entropy gives us an idea of the portion of energy that does not convert into work and instead adds to the disorder of the system. This is what makes entropy an interesting concept: it challenges the belief that heat can be completely converted into work.

Entropy also relates to spontaneity: in a spontaneous process, the total entropy of the system and its surroundings increases. This idea is used to restate the second law of thermodynamics.
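Path independence can be checked numerically. The sketch below assumes one mole of a monatomic ideal gas with a constant molar heat capacity C_v = 3R/2 (illustrative values, not taken from the text above). It computes ΔS between the same two states along two different routes. The first route is direct, using ΔS = nC_v ln(T₂/T₁) + nR ln(V₂/V₁). The second is a reversible adiabatic expansion (for which ΔS = 0) followed by heating at constant volume. The function names are hypothetical.

```python
import math

R = 8.314  # molar gas constant, J/(mol*K)

def delta_s_direct(n, cv, t1, v1, t2, v2):
    """Entropy change of an ideal gas from (t1, v1) to (t2, v2).

    Uses dS = n*Cv*ln(T2/T1) + n*R*ln(V2/V1), assuming Cv is constant.
    """
    return n * cv * math.log(t2 / t1) + n * R * math.log(v2 / v1)

def delta_s_via_adiabat(n, cv, t1, v1, t2, v2):
    """Same end states, reached along a different path.

    Step 1: reversible adiabatic expansion from v1 to v2. No heat flows,
            so dS = 0, and T*V**(R/Cv) stays constant, lowering T to t_mid.
    Step 2: heating at constant volume v2 from t_mid up to t2.
    """
    t_mid = t1 * (v1 / v2) ** (R / cv)
    step1 = 0.0
    step2 = n * cv * math.log(t2 / t_mid)
    return step1 + step2

if __name__ == "__main__":
    cv = 1.5 * R  # monatomic ideal gas (assumption)
    # Only volume ratios matter, so litres are fine here.
    a = delta_s_direct(1.0, cv, 300.0, 1.0, 400.0, 2.0)
    b = delta_s_via_adiabat(1.0, cv, 300.0, 1.0, 400.0, 2.0)
    print(f"direct path:    {a:.2f} J/K")
    print(f"via an adiabat: {b:.2f} J/K")
```

Both routes print ΔS ≈ 9.35 J/K. The heat exchanged differs between the two paths, but the entropy change does not, because entropy is a state function.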

Thermodynamics is the study of the changes in energy associated with changes in temperature and heat. It also deals with the work done in converting energy from one form to another. Three laws govern the science of thermodynamics, and here we will discuss the second law. The second law of thermodynamics introduces the concept of entropy and tells us that the entropy of the universe never decreases: in every spontaneous process it increases. By this law, the change in entropy of the universe can never be negative.

Entropy is the measure of disorder or randomness. The term disorder denotes the irregularity or lack of uniformity of a thermodynamic system. This randomness could concern the entire universe, a simple chemical reaction, or something as simple as heat exchange and heat transfer. In the case of gas particles, entropy is generally higher than for solid ones. The entropy formula can also be used, with different equations, to calculate the entropy change of the surroundings. So, let's understand this concept of entropy and the change in entropy.
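The relations behind that calculation are standard textbook results:

- For heat transferred reversibly at temperature T, ΔS = q_rev / T.
- At constant temperature and pressure, the surroundings receive heat −ΔH_sys, so ΔS_surr = −ΔH_sys / T.
- The second law requires ΔS_univ = ΔS_sys + ΔS_surr ≥ 0, with ΔS_univ > 0 for a spontaneous process.

The Python sketch below applies these relations to melting ice. It assumes ΔH_fus ≈ 6.01 kJ/mol and treats ΔH and ΔS of fusion as independent of temperature, which is only an approximation away from 0 °C. The function names are illustrative, not from any library.

```python
T_MELT = 273.15           # K, normal melting point of ice
DH_FUS = 6010.0           # J/mol, approximate enthalpy of fusion of ice
DS_FUS = DH_FUS / T_MELT  # J/(mol*K), entropy of fusion from q_rev / T

def delta_s_surroundings(delta_h_sys, temperature):
    """Entropy change of the surroundings at constant T and P: -dH_sys / T."""
    return -delta_h_sys / temperature

def delta_s_universe(delta_s_sys, delta_h_sys, temperature):
    """Total entropy change; the second law requires it to be >= 0."""
    return delta_s_sys + delta_s_surroundings(delta_h_sys, temperature)

if __name__ == "__main__":
    for t in (263.15, 273.15, 283.15):  # -10 C, 0 C, +10 C
        ds_univ = delta_s_universe(DS_FUS, DH_FUS, t)
        if ds_univ > 1e-9:
            verdict = "spontaneous"
        elif ds_univ < -1e-9:
            verdict = "not spontaneous"
        else:
            verdict = "equilibrium"
        print(f"{t:.2f} K: dS_univ = {ds_univ:+.2f} J/(mol*K) -> {verdict}")
```

With these assumptions, ΔS_univ comes out negative at −10 °C, zero at 0 °C and positive at +10 °C. This matches the everyday observation that ice melts on its own only above its melting point.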

# Example of entropy #

Entropy is basically a thermodynamic function that is needed to describe the randomness of a process or system. The concept of entropy came into existence with the second law of thermodynamics. For example, in a solid, where particles are not free to move, the entropy is lower than in a gas, whose particles can readily become disarranged.
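To make the solid-versus-gas comparison concrete, here is a toy lattice model. It is an illustrative assumption, not a realistic description of matter. Space is divided into cells, identical particles occupy at most one cell each, and the number of arrangements W is converted to entropy with Boltzmann's relation S = k_B ln W. A solid is modelled as the case where there are exactly as many cells as particles, so only one arrangement exists. A gas can spread over many more cells. The helper names are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)

def microstates(n_particles, n_cells):
    """Ways to place identical particles in distinct cells, at most one
    particle per cell: W = C(n_cells, n_particles)."""
    return math.comb(n_cells, n_particles)

def boltzmann_entropy(w):
    """Boltzmann's relation S = k_B * ln(W)."""
    return K_B * math.log(w)

if __name__ == "__main__":
    n = 10  # number of particles (toy value)
    cases = (
        ("solid (cells = particles)", n),
        ("gas, small box", 100),
        ("gas, large box", 10_000),
    )
    for label, cells in cases:
        w = microstates(n, cells)
        print(f"{label:28s} W = {w:.3e}  S = {boltzmann_entropy(w):.3e} J/K")
```

In this model the "solid" has W = 1 and therefore S = 0. Giving the particles more cells to move in, as a gas has, increases W and hence S. The same counting explains why a gas that spreads into a larger space gains entropy.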

Another good example to illustrate entropy is spraying perfume in one corner of a room. What will happen next? The perfume will not stay in that corner only; its fragrance can soon be felt everywhere. So, from an ordered state, the perfume reaches a disordered state by spreading throughout the room. I hope this example gives you a better idea of entropy.

Entropy can also be described in terms of the total number of ways in which a system can be arranged: the higher the entropy, the more ways there are to arrange the system. Let us see how this works. Suppose you have a bag of balls. If you draw one ball from the bag, how many possible ways are there to arrange the drawn balls on the table? Now draw one more ball from the bag and try to answer the same question. Repeat the process until the bag is empty. Obviously, the more balls are on the table, the more ways there are to arrange them, and the concept of entropy in chemistry is somewhat similar.
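The ball-drawing exercise can be sketched in Python. The sketch assumes the balls are distinguishable (the colours below are hypothetical) and are laid out in a single row. Enumerating the orderings with `itertools.permutations` shows that k balls can be arranged in k! ways, so the count grows very quickly as balls are added. The printed ln W is the quantity that Boltzmann's relation S = k_B ln W turns into an entropy.

```python
import math
from itertools import permutations

def arrangements(balls):
    """Count the distinct orderings of the given balls in a row by
    enumerating every permutation explicitly."""
    return sum(1 for _ in permutations(balls))

if __name__ == "__main__":
    # Hypothetical bag of distinguishable balls.
    bag = ["red", "blue", "green", "yellow", "white"]
    table = []
    while bag:
        table.append(bag.pop())  # draw one more ball from the bag
        w = arrangements(table)
        assert w == math.factorial(len(table))  # enumeration agrees with k!
        print(f"{len(table)} ball(s) on the table: {w} arrangements, "
              f"ln W = {math.log(w):.2f}")
```

Drawing the five balls one at a time gives 1, 2, 6, 24 and 120 possible arrangements. Explicit enumeration is used here only to make the counting visible; for larger numbers, `math.factorial` gives the same count directly.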
